KwarqsDashboard is an award-winning control system developed in 2013 for FIRST Robotics Team 2423, The Kwarqs. For the second year in a row, Team 2423 won the Innovation in Control award at the 2013 Boston Regional. The judges cited this control system as the primary reason for the award.

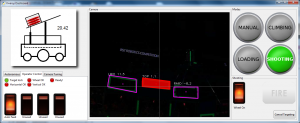

It is designed to be used with a touchscreen, and robot operators use it to select targets and fire frisbees at them. Check out the shiny screenshot above!

We built a lot of really useful features into this control interface, and once we got our mechanical and electrical bugs ironed out, the robot was performing quite well. We’re looking forward to competing with it at WPI Battle Cry 2013 in May! Here’s a list of some of the many features it has:

- Written entirely in Python

- Image processing using the OpenCV Python bindings; GUI written using PyGTK

- Cross-platform: fully functional on Linux and Windows 7/8

- All control/feedback features use NetworkTables, so the same robot can be controlled using the SmartDashboard instead if needed

- SendableChooser compatible implementation for mode switching

- Animated robot drawing that shows how many frisbees are present, and tilts the shooter platform to match the platform's actual current angle

- Allows operators to select different modes of operation for the robot using brightly lit toggle switches

- Operators can choose an autonomous mode on the dashboard, and set which target the robot should aim for in modes that use target tracking

- Switches operator perspective when robot switches modes

- Simulated lighted rocker switches to activate robot systems

- Errors are written to log files on disk when they occur
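As a rough illustration of the error logging item above, here is a minimal sketch using Python's standard `logging` module. This is not the actual KwarqsDashboard code: the logger name, the log file name, and the simulated failure are all assumptions made for the example.

```python
import logging
import os
import tempfile


def configure_logging(log_path):
    """Route ERROR-and-above records (with tracebacks) to a file on disk."""
    logger = logging.getLogger("dashboard_sketch")  # hypothetical logger name
    logger.setLevel(logging.DEBUG)

    handler = logging.FileHandler(log_path)
    handler.setLevel(logging.ERROR)  # only errors reach the file
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
    )
    logger.addHandler(handler)
    return logger, handler


# Hypothetical log location; a real dashboard would pick a stable directory.
log_path = os.path.join(tempfile.mkdtemp(), "dashboard.log")
logger, handler = configure_logging(log_path)

logger.info("camera connected")  # below ERROR, so it is not written to the file

try:
    1 / 0  # stand-in for a failure somewhere in the dashboard
except ZeroDivisionError:
    # logger.exception() logs at ERROR level and appends the traceback
    logger.exception("image processing failed")

handler.close()
with open(log_path) as f:
    log_text = f.read()
```

Filtering at the handler rather than at the logger keeps the door open for adding a more verbose console handler later without changing what lands on disk.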

Target acquisition image processing features:

- Tracks the selected targets in a live camera stream, and determines adjustments the robot should make to aim at the target

- User can click on targets to tell the robot what to aim at

- Differentiates between top/middle/low targets

- Partially obscured targets can be identified

- Target changes colors when the robot is aimed properly
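To make a few of the items above more concrete, here is a minimal sketch of click-to-select, the aiming adjustment, and the "aimed" color change. It is not the KwarqsDashboard implementation. The function names, the bounding box layout, the 47 degree field of view, the 320 pixel image width, the one degree tolerance, and the colors are all assumptions for illustration. The angle calculation uses a simple pinhole camera model.

```python
import math


def target_at(click_x, click_y, targets):
    """Return the first target whose bounding box contains the click, else None."""
    for target in targets:
        x, y, w, h = target["box"]  # assumed layout: left, top, width, height
        if x <= click_x <= x + w and y <= click_y <= y + h:
            return target
    return None


def horizontal_offset_degrees(target_center_x, image_width, horizontal_fov_deg):
    """Angle the robot would need to turn to center the target in the image.

    Positive means the target is right of center. The focal length in pixels
    is derived from the horizontal field of view (pinhole camera model).
    """
    half_width = image_width / 2.0
    focal_px = half_width / math.tan(math.radians(horizontal_fov_deg) / 2.0)
    return math.degrees(math.atan2(target_center_x - half_width, focal_px))


def target_color(offset_deg, tolerance_deg=1.0):
    """Pick a drawing color: one color when aimed, another otherwise."""
    return "green" if abs(offset_deg) <= tolerance_deg else "red"


# Hypothetical detections from one camera frame.
targets = [
    {"name": "top", "box": (100, 20, 62, 20)},
    {"name": "middle", "box": (220, 60, 62, 29)},
]

selected = target_at(120, 30, targets)  # operator taps inside the "top" box
x, y, w, h = selected["box"]
offset = horizontal_offset_degrees(x + w / 2.0, image_width=320, horizontal_fov_deg=47.0)
color = target_color(offset)
```

In this example the selected target sits left of the image center, so the offset is negative and the target would be drawn in the "not aimed" color until the robot turns toward it.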

Fully integrated realtime analysis support for target acquisition:

- Adjustable thresholding, saves settings to file

- Enable/disable drawing features and labels on detected targets

- Show extra threshold images

- Can log captured images to file, once per second

- Can load a directory of images for analysis, instead of connecting to a live camera
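For the "saves settings to file" item above, one simple approach is to persist the threshold values as JSON and fall back to defaults when the file is missing or unreadable. The sketch below assumes HSV-style thresholds. The setting names, default values, and file name are hypothetical and are not taken from the KwarqsDashboard source.

```python
import json
import os
import tempfile

# Hypothetical threshold names and defaults, for illustration only.
DEFAULT_THRESHOLDS = {
    "hue_min": 30, "hue_max": 90,
    "sat_min": 100, "sat_max": 255,
    "val_min": 60, "val_max": 255,
}


def save_thresholds(path, thresholds):
    """Write thresholds to a temp file, then swap it in so a crash mid-write
    cannot leave a half-written settings file behind."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(thresholds, f, indent=2, sort_keys=True)
    os.replace(tmp_path, path)


def load_thresholds(path):
    """Return stored thresholds, using defaults for anything missing or invalid."""
    settings = dict(DEFAULT_THRESHOLDS)
    try:
        with open(path) as f:
            stored = json.load(f)
    except (OSError, ValueError):  # missing file or malformed JSON
        return settings
    if isinstance(stored, dict):
        for key in settings:
            if key in stored:
                settings[key] = stored[key]
    return settings


settings_path = os.path.join(tempfile.mkdtemp(), "thresholds.json")

first_run = load_thresholds(settings_path)  # no file yet, so defaults
adjusted = dict(first_run, hue_min=45)      # operator drags a slider
save_thresholds(settings_path, adjusted)
reloaded = load_thresholds(settings_path)   # next startup sees the change
```

Merging stored values over the defaults means a settings file written by an older version still loads after new thresholds are added.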

So, as you can see, lots of useful things. We’re releasing the full source code for it under a GPL license, so go ahead, download it, play with it, and let me know what you think! Hope you find this useful.

A lot of people made this project possible:

- Some code structuring ideas and PyGTK widget ideas were derived from my work with Exaile

- Team 341 graciously open sourced their image processing code in 2012, and our image processing is heavily derived from a Python port of that code.

- Sam Rosenblum helped develop the idea for the dashboard, and helped refine some of the operating concepts.

- Stephen Rawls helped refine the image processing code and distance calculations.

- Youssef Barhomi wrote image processing code for the Kwarqs in 2012, and some of the ideas from that code were borrowed.

The included images were obtained from various places:

- Linda Donoghue created the robot image

- The fantastic lighted rocker switches were created by Keith Sereby, and are distributed with permission.

- The green buttons were obtained via Google image search; I don’t recall the original source.